After the Interface: Rethinking Design for a World of AI Agents

For years, software design has been dominated by the SaaS model—dashboard-heavy, admin-focused applications packed with tables, forms, and validation flows. These CRUD apps (Create, Read, Update, Delete) are everywhere, powering businesses by letting users manage structured data.

But they come with an inherent problem: they’re expensive and complex to build. Every interaction requires a bespoke interface—custom reporting views, multi-step wizards, intricate validation rules. We, as designers and developers, have spent years crafting these systems to let users interact with their data in highly structured ways.

With AI becoming more sophisticated, I believe this paradigm is about to change. Instead of designing interfaces that let users manually input and manipulate data, we’re moving toward systems where users describe what they want to happen, and agents handle the rest. This isn’t just about chatbots—it’s a fundamental shift in how software works and how people interact with it.

The Future of Interfaces in an Agentic World

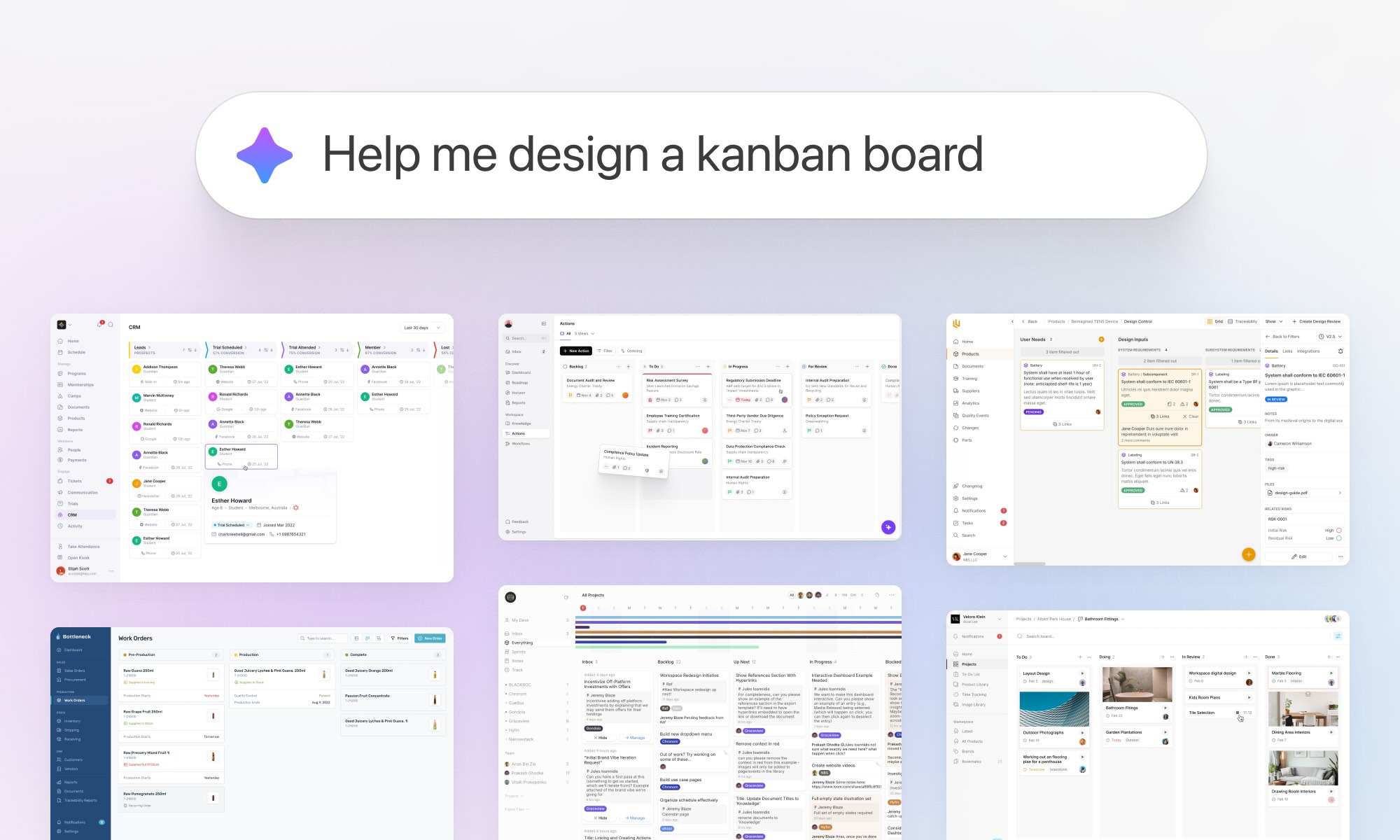

Interfaces aren’t going away; they’re just changing dramatically. As designers, we won’t be spending as much time crafting step-by-step wizards or static dashboards. Instead, we’ll be building more generalized, flexible environments that adapt to user needs in real time.

Users will still need interfaces to interact with agents, but they won’t look like what we’ve been designing for the past decade. Instead of a fixed dashboard, applications might offer configurable workspaces, AI-generated summaries, or conversational interfaces.

Take an accounting SaaS, for example. There will still be an invoices section and a customers section—some structures will remain familiar. But these views won’t just be static lists. They’ll include AI-enhanced search that allows users to ask questions and surface insights. When viewing a customer profile or an invoice, there will still be well-designed overview pages, but right alongside them, users will have the option to interact with AI to extract key information or take suggested actions.

These shifts don’t eliminate UI—they make it more dynamic and responsive. We’re moving from designing rigid workflows to designing adaptable, AI-powered environments.

Designing for Agents: Emerging Patterns

Agentic design introduces new challenges and new interface patterns. Below, I’ll go through some of the key patterns that are already emerging and will likely define the next generation of interfaces.

1. Collaborative Workspaces

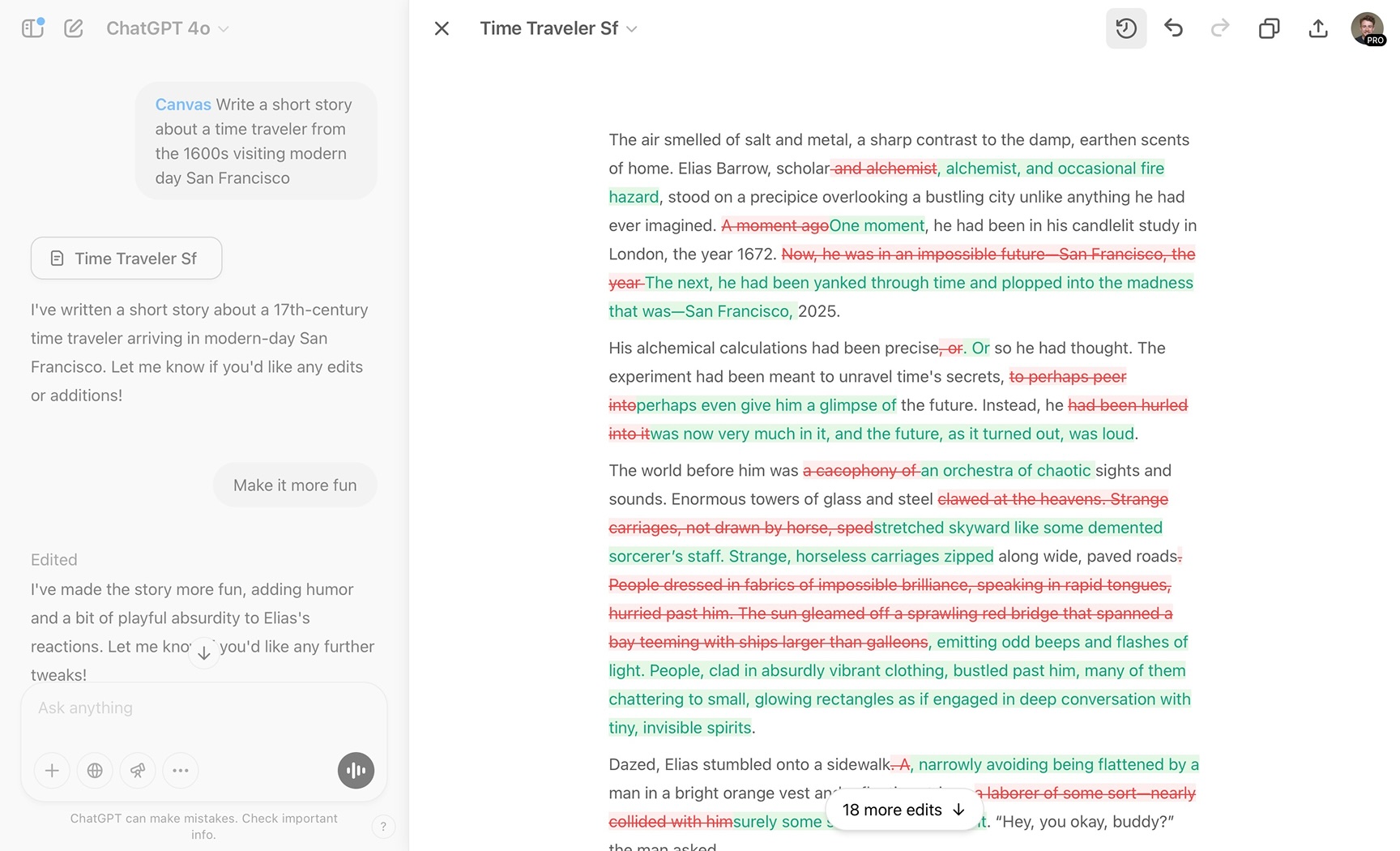

One of the biggest shifts we’re seeing is the move from form-driven interfaces to AI-assisted workspaces. Instead of rigid input fields and buttons, users collaborate with AI in a shared environment, where the system acts as a persistent assistant rather than a static tool.

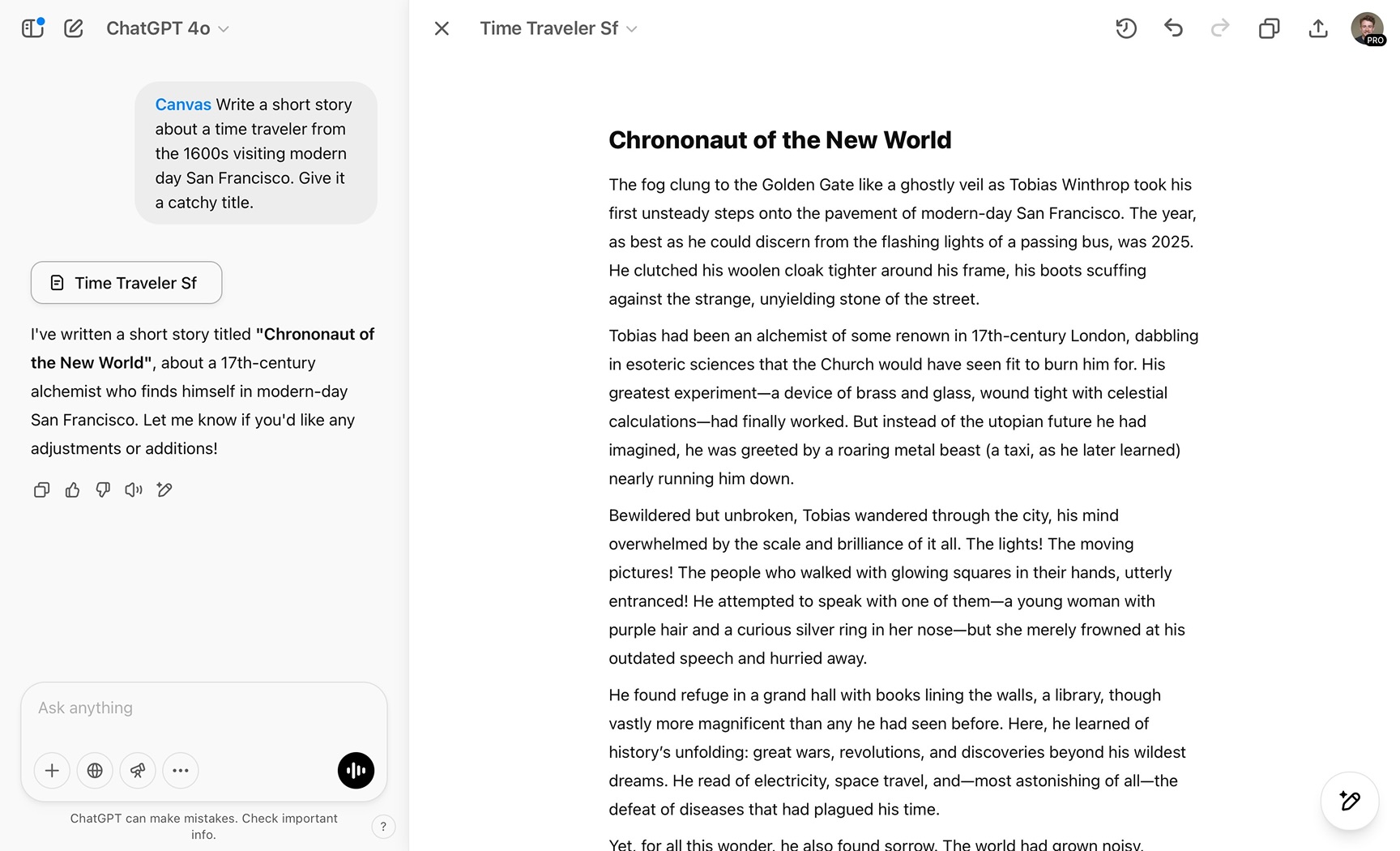

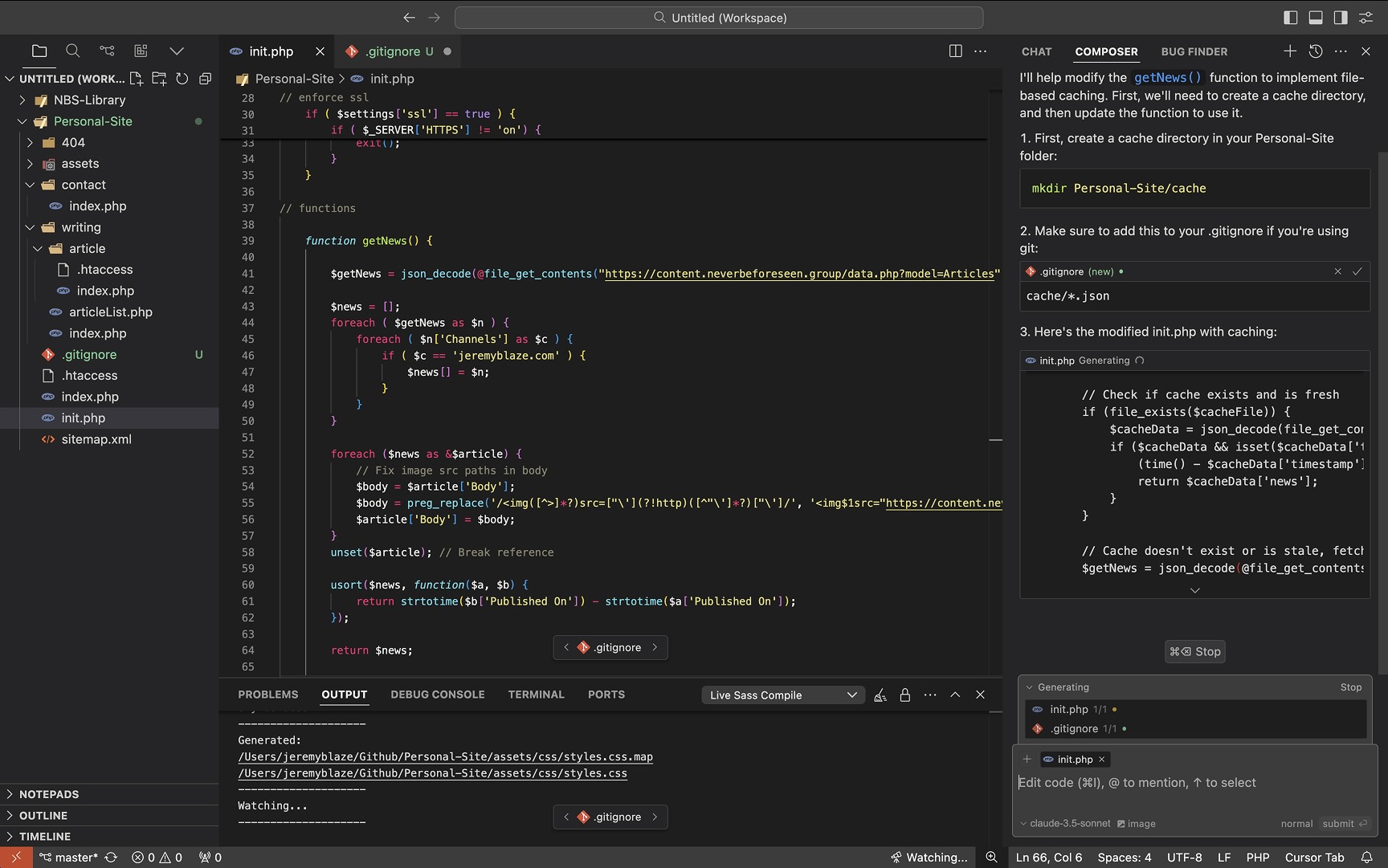

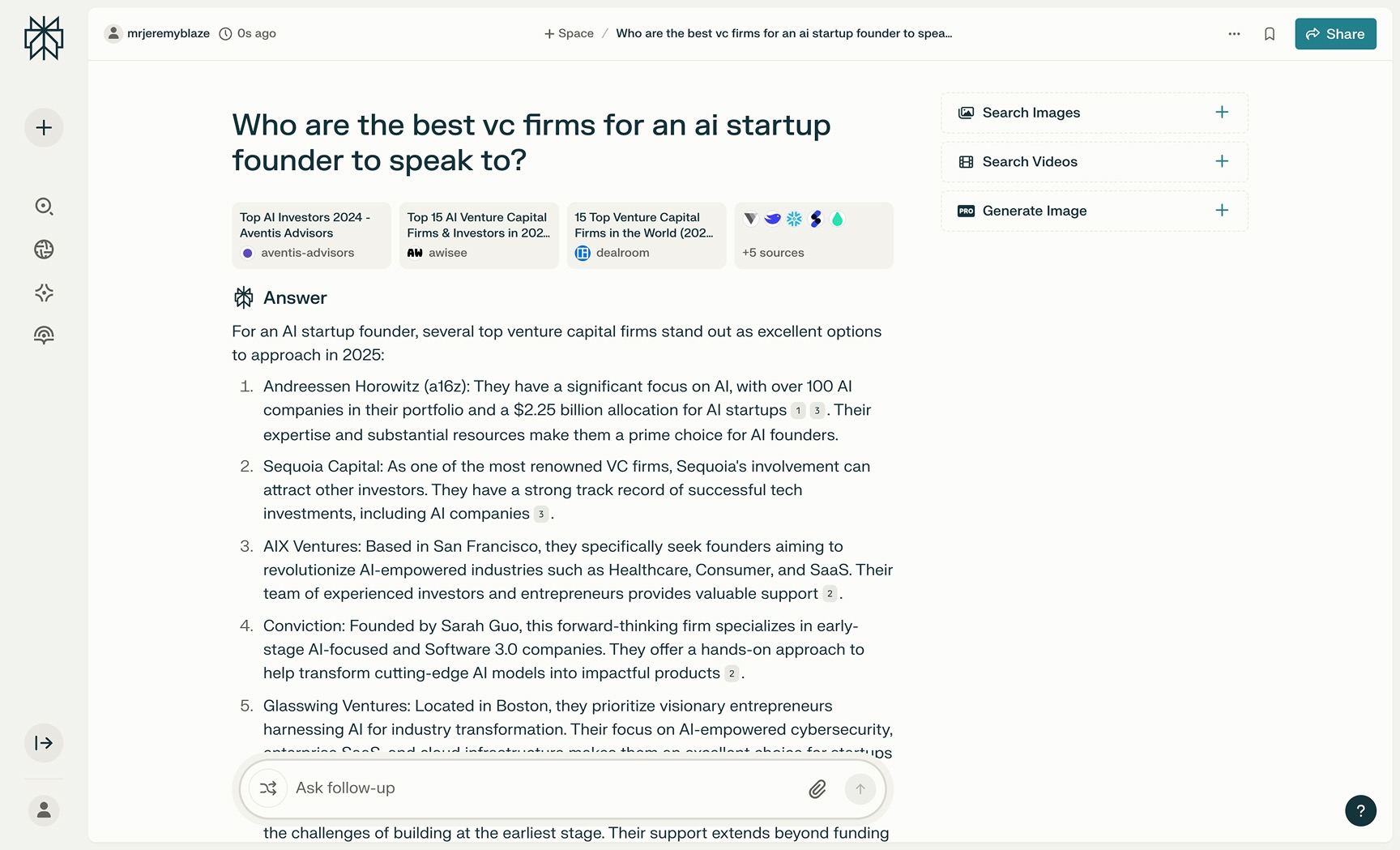

Perhaps the best current examples are ChatGPT's Canvas feature and Cursor's side panel. Both allow seamless collaboration between AI and human, where both can make changes simultaneously and the interface helps communicate and resolve conflicts.

A small side note: multiplayer interfaces like those in Google Docs or Figma, where multiple users can edit at the same time, are an obvious place to see agents working as well, essentially behaving as part of your team. I haven't seen anyone ship this just yet, though.

2. Transparency, Audit Trails, and Showing Work

A core challenge of agentic design is trust. If an AI is making decisions on a user’s behalf, the system must clearly communicate what it’s doing and allow for human oversight by showing the reasoning behind its actions and keeping clear audit trails of what took place.

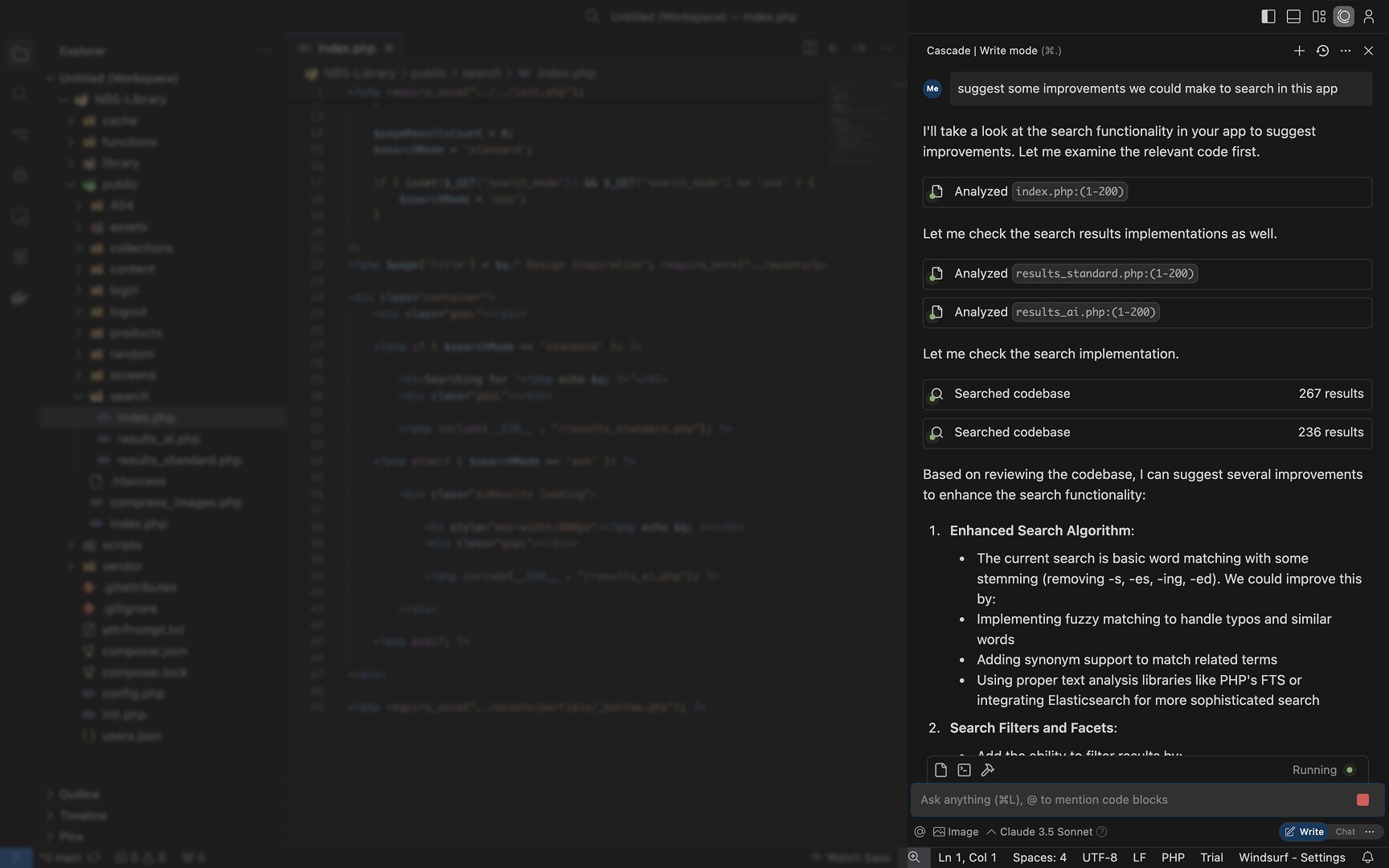

Showing Work – Interfaces must highlight AI-generated changes, as Windsurf's builder agent does.

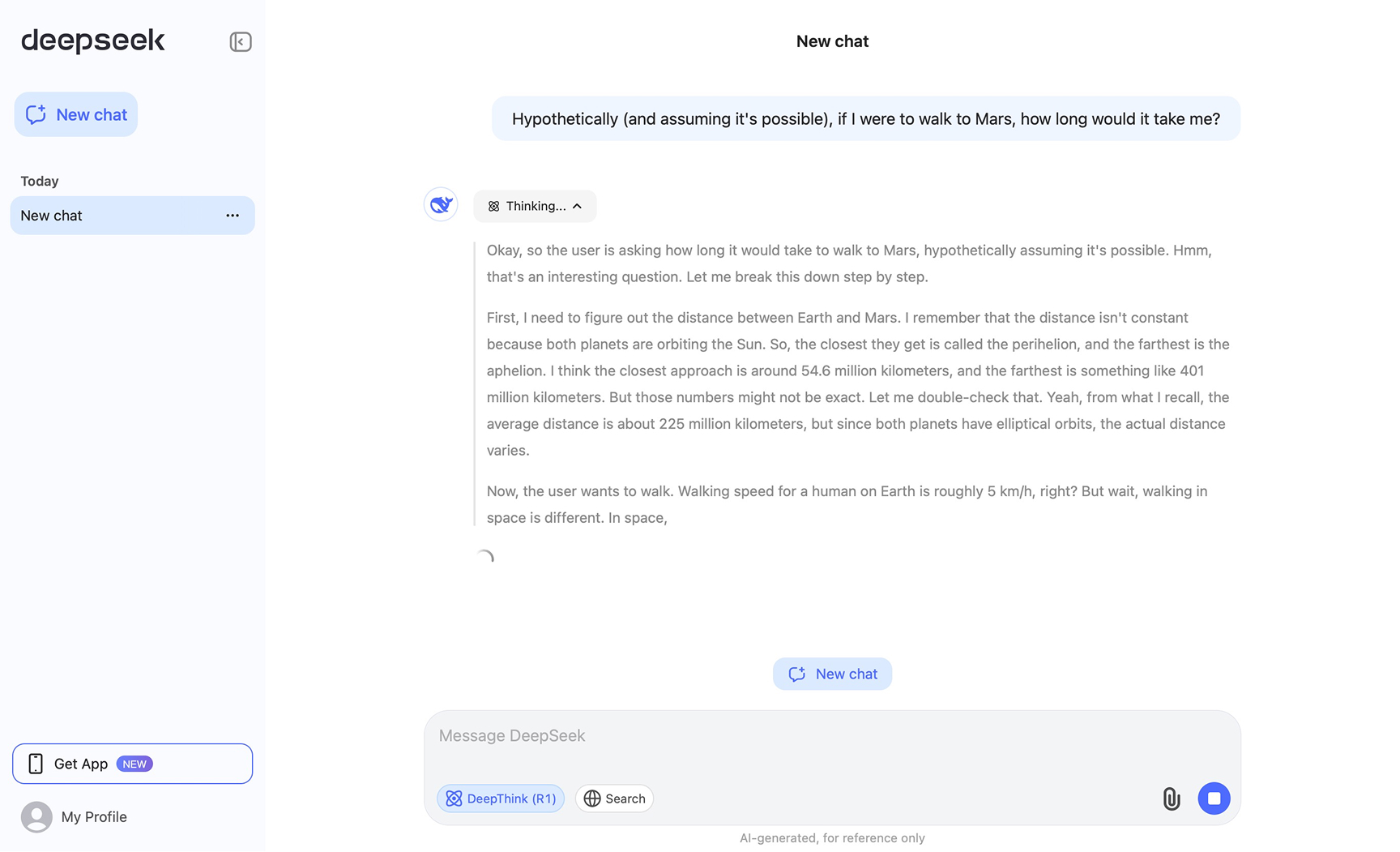

Showing Work & Reasoning – AI should visibly document the steps it took, whether scraping data, conducting research, or making structured decisions. DeepSeek goes as far as explaining, at the model level, how it's approaching a given task.

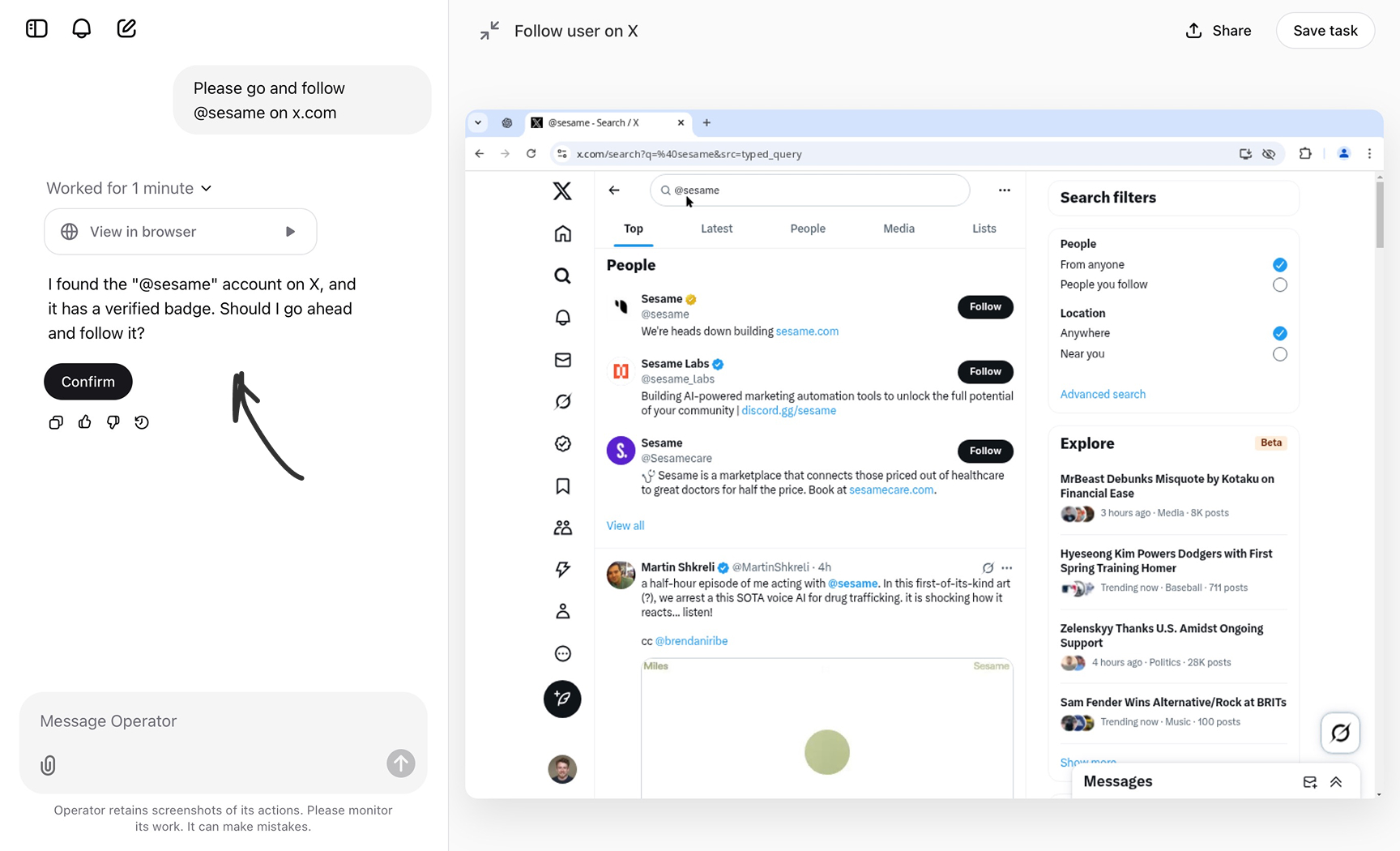

4. Immutability and Confirmatory Interfaces

AI agents should avoid taking irreversible actions without user confirmation. Many emerging designs ensure that AI systems present their proposed actions for review before executing them.

Sources/Citations – Being able to see where an agent sourced its information (be it your own data or a web search) is critical to building trust and ensuring auditability. RAG (Retrieval-Augmented Generation) is a key component here: it retrieves relevant passages from large datasets at query time and hands them to the model, so answers can be traced back to the documents they drew on.
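
To make the traceability idea concrete, here is a minimal Python sketch of keeping provenance attached to retrieved text. It assumes a toy keyword-overlap retriever in place of the embedding search a real RAG system would typically use. The `Passage`, `retrieve`, and `build_cited_context` names and the sample data are hypothetical. The point is only that each snippet handed to the model carries a source ID the interface can render as a citation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source_id: str  # hypothetical ID: a document key, record ID, or URL
    text: str


def retrieve(query: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Toy retriever: rank passages by how many words they share with the query.

    A production RAG system would usually rank by embedding similarity instead.
    """
    terms = set(query.lower().split())
    scored = [(len(terms & set(p.text.lower().split())), p) for p in corpus]
    matches = [(score, p) for score, p in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return [p for _, p in matches[:k]]


def build_cited_context(passages: list[Passage]) -> tuple[str, list[str]]:
    """Number each passage for the prompt and return the sources to display."""
    context = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    sources = [p.source_id for p in passages]
    return context, sources


# Hypothetical sample data for an accounting app.
corpus = [
    Passage("invoice-1042", "Invoice 1042 for Acme is overdue by 30 days"),
    Passage("policy-7", "Overdue invoices trigger a reminder email"),
    Passage("faq-3", "Customers can update billing details online"),
]
context, sources = build_cited_context(retrieve("which invoice is overdue", corpus))
```

Numbering the passages lets the model refer to `[1]`, `[2]`, and so on. The returned `sources` list gives the UI something concrete to link each citation to.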

Confirmatory Interfaces – When browsing or automating actions, the AI stops before executing irreversible steps and requires explicit confirmation. ChatGPT Operator, for instance, asks the user to confirm before taking any critical action in a flow, such as clicking a 'buy' button or, in the case below, following someone on X.

Operator introduced some interesting new interaction patterns, including the ability for the user to 'take control' from the AI. I highly recommend trying it out.
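
A confirmatory gate can be sketched in a few lines. This is not how Operator is built. It is a minimal illustration that assumes the agent labels each proposed action as reversible or not. `ProposedAction`, `execute_with_confirmation`, and the `confirm` callback (which in a real product would be a confirmation dialog) are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str        # what the user sees, e.g. "Follow @example on X"
    irreversible: bool      # assumption: the agent flags each action itself
    run: Callable[[], str]  # the side effect, only called once allowed


def execute_with_confirmation(
    action: ProposedAction, confirm: Callable[[str], bool]
) -> str:
    """Run reversible actions immediately; pause irreversible ones until
    `confirm` (a stand-in for a confirmation dialog) returns True."""
    if action.irreversible and not confirm(action.description):
        return f"skipped: {action.description}"
    return action.run()


def decline_everything(description: str) -> bool:
    # Stand-in for a user who says "no" to every confirmation prompt.
    return False


actions = [
    ProposedAction("Draft a reply", irreversible=False, run=lambda: "drafted"),
    ProposedAction("Click 'buy'", irreversible=True, run=lambda: "purchased"),
]
results = [execute_with_confirmation(a, decline_everything) for a in actions]
```

Routing every action through a single gate means the UI only needs one confirmation surface. Declining also leaves a visible "skipped" record rather than a silent no-op.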

Version Control & History – Storing past versions of records and making it easy to roll back changes made by AI ensures that when errors inevitably occur, either a human or an agent can rectify them.
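
Version history can be represented as an append-only list of snapshots, each tagged with who made the change. The sketch below is a hypothetical Python illustration: `VersionedRecord`, the `"human"`/`"agent"` author labels, and the sample invoice are all assumptions. It treats a rollback as a new version rather than deleting history, so the audit trail survives the fix.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any


@dataclass
class Version:
    data: dict[str, Any]
    author: str  # hypothetical labels: "human" or "agent"
    note: str


@dataclass
class VersionedRecord:
    history: list[Version] = field(default_factory=list)

    @property
    def current(self) -> dict[str, Any]:
        return self.history[-1].data if self.history else {}

    def update(self, changes: dict[str, Any], author: str, note: str) -> None:
        # Copy-on-write: earlier snapshots are never mutated.
        # (A shallow copy is enough here because the records are flat.)
        snapshot = {**self.current, **changes}
        self.history.append(Version(snapshot, author, note))

    def rollback(self, to_index: int, author: str) -> None:
        # A rollback is recorded as a new version, so the mistake stays visible.
        restored = dict(self.history[to_index].data)
        self.history.append(Version(restored, author, f"rollback to v{to_index}"))


invoice = VersionedRecord()
invoice.update({"amount": 100, "status": "draft"}, "human", "created")
invoice.update({"amount": 1000}, "agent", "agent edit with a wrong amount")
invoice.rollback(0, "human")
```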

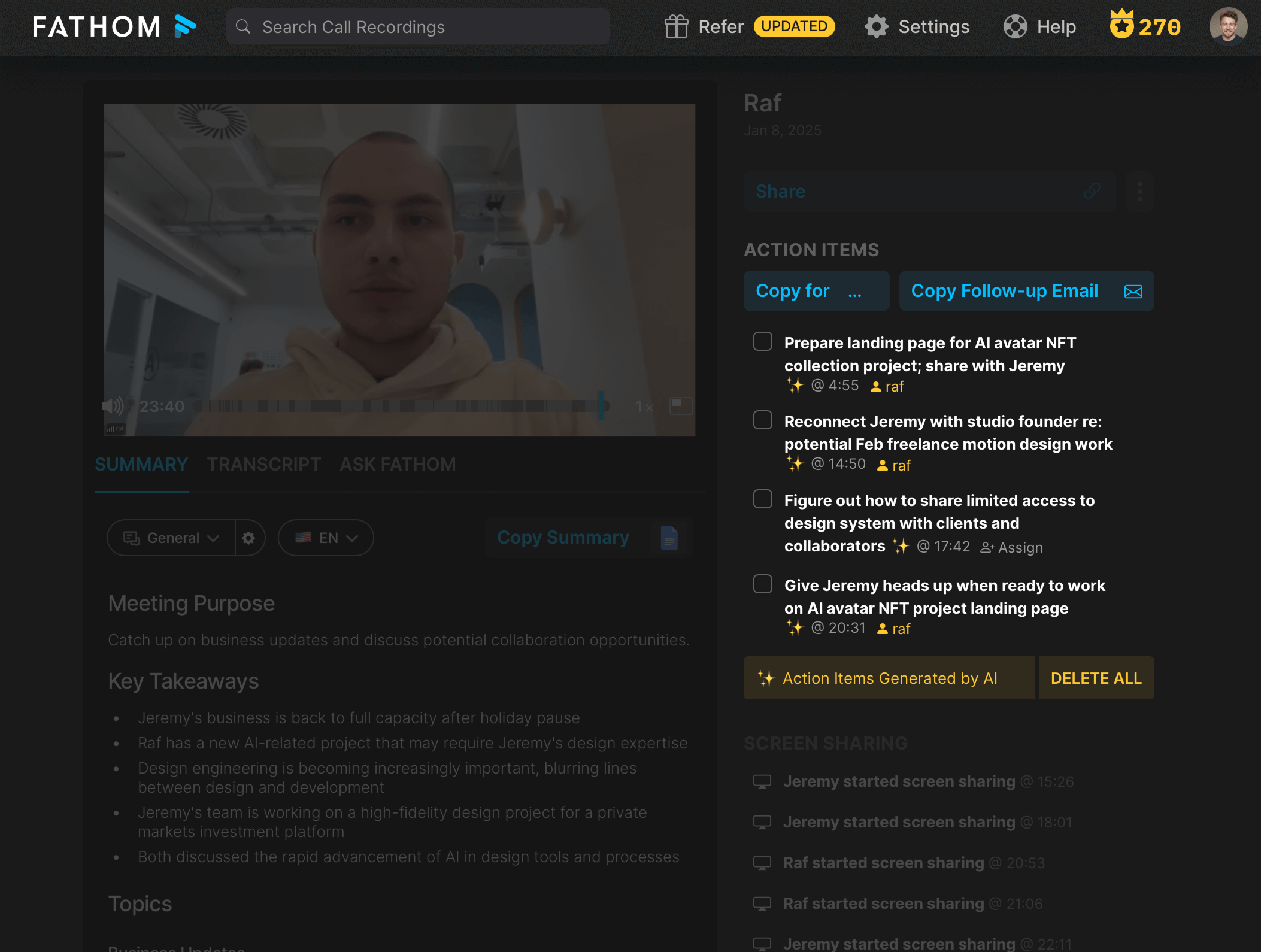

5. Task and Notification Interfaces for Human Oversight

As agents become more proactive, users need structured ways to review AI-generated tasks and take action. Some tasks can be assigned to agents, but the AI will likely be unable to complete others, so it may autonomously assign humans to those.

Task Lists created by AI – Instead of users manually working through processes, AI agents generate structured task lists for both agents and humans to work on. Fathom and other meeting recorders already create lists of 'Action Items' for users to work on.

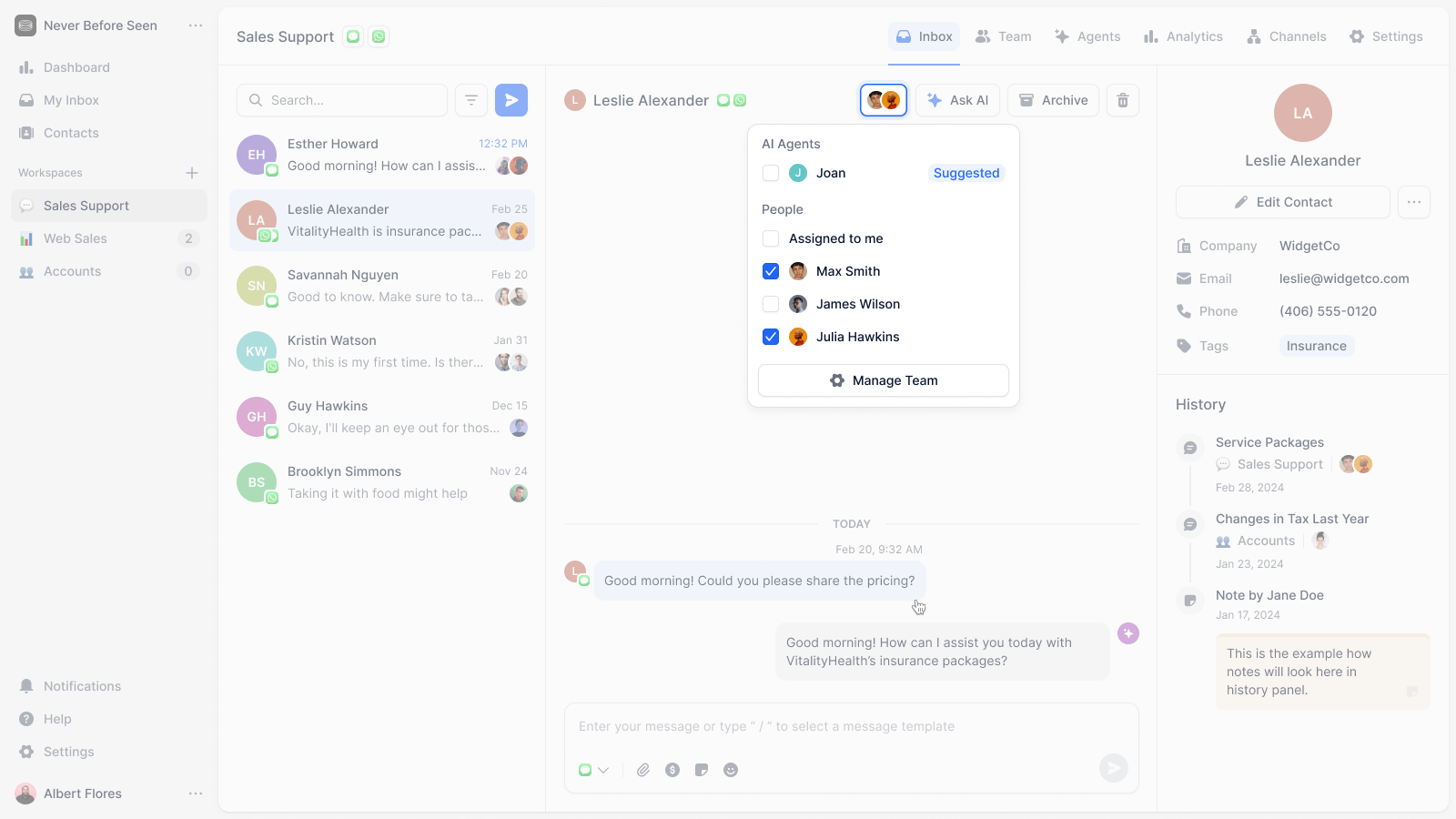

Autonomous assignment – For Converso, a customer support startup, we blurred the line between agents and human operators, allowing either to be assigned to any conversation.
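
One simple way to let either an agent or a person pick up work is to route each task by capability and fall back to a human when the agent can't handle it. This is a hypothetical sketch, not Converso's implementation. The skill tags, the `AGENT_SKILLS` set, `assign_task`, and the least-busy fallback rule are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    title: str
    skill: str                       # hypothetical capability tag
    assignee: Optional[str] = None


# Assumption: the set of task types the agent is trusted to handle alone.
AGENT_SKILLS = {"answer_faq", "summarize", "tag"}


def assign_task(task: Task, humans: list[str], load: dict[str, int]) -> Task:
    """Give the task to the agent when it has the skill; otherwise hand it
    to the least-busy human so nothing silently falls through."""
    if task.skill in AGENT_SKILLS:
        task.assignee = "agent"
    else:
        person = min(humans, key=lambda h: load.get(h, 0))
        task.assignee = person
        load[person] = load.get(person, 0) + 1
    return task


load = {"ana": 2, "ben": 0}
tasks = [
    Task("Where is my invoice?", "answer_faq"),
    Task("Approve a refund over the limit", "refund"),
    Task("Handle a billing dispute", "escalation"),
]
assigned = [assign_task(t, ["ana", "ben"], load).assignee for t in tasks]
```

Sending unhandled tasks to a named person, instead of leaving them unassigned, keeps a human in the loop once the agent reaches the limit of what it can do.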

6. Digests & Summaries

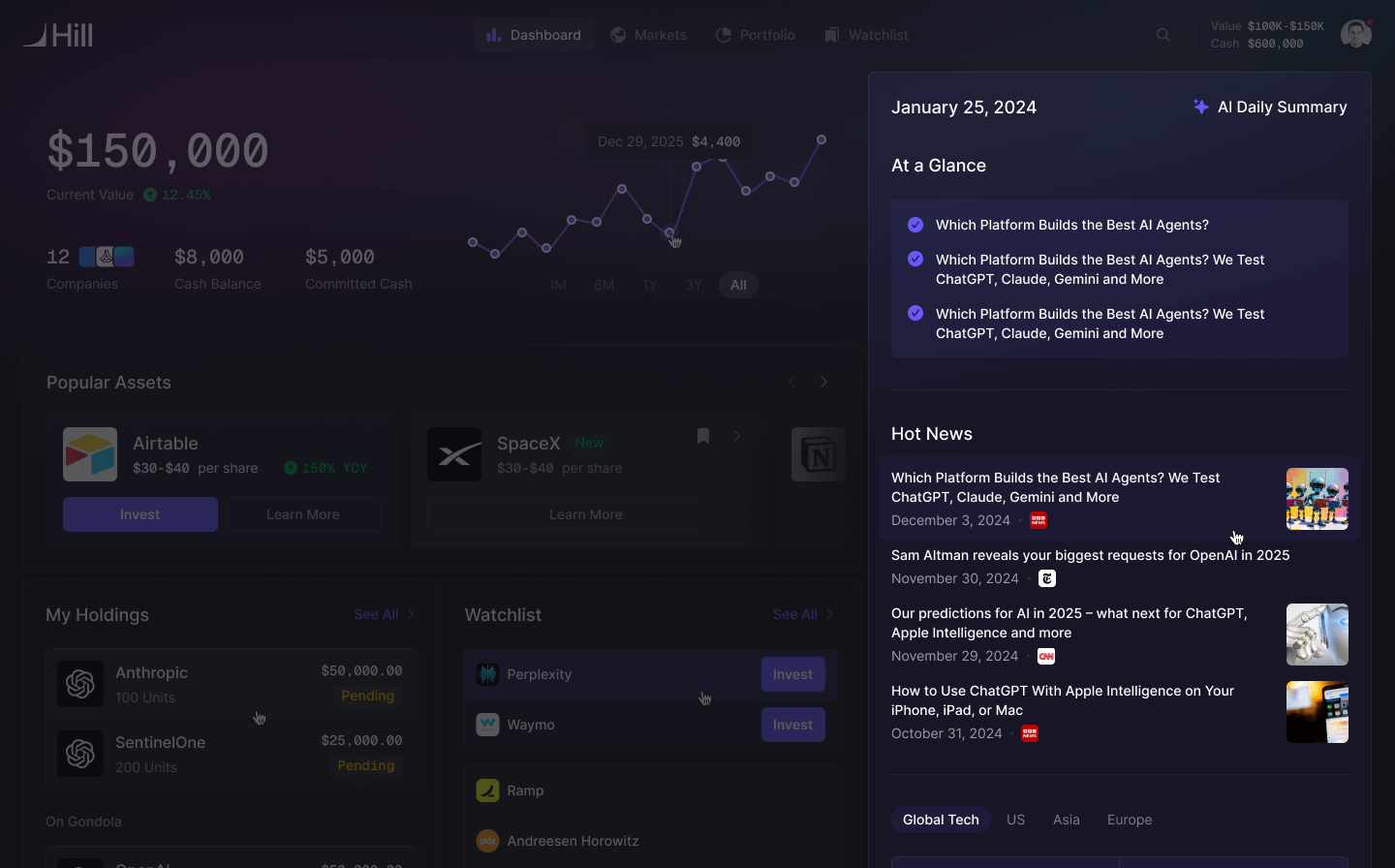

For need-to-know information, the default has long been the dashboard. Another pattern I expect to see more of is AI-driven dashboards and summaries of what's happened while you were away. Below, you can see one we designed for hill.com, which updates users on market changes and important news from the past day.

Where This Is Headed

The shift from traditional SaaS interfaces to agentic design is already underway. As AI systems improve, we’re going to see less reliance on rigid, manually driven CRUD applications and more emphasis on dynamic, AI-assisted workflows. This will require an entirely new approach to design, one that prioritizes collaboration, transparency, and trust over static UI elements.

For designers, this means rethinking everything. How do we communicate AI intent? How do we make sure users stay in control? How do we build interfaces that feel like working with a competent assistant rather than fighting against an unpredictable system?

The future of interface design isn’t about more polished forms and dashboards. It’s about designing environments where humans and AI collaborate seamlessly.

If you have any ideas or suggestions for more patterns we should be looking into or adding to this article, you can reach me on X at @mrjeremyblaze.

Introducing the all new Marathon – the tool for people who live in Figma

First Make Them Care: The $50B Communication Gap Between Experts and Success

AI Is Offering A New First Step To The Mental Health Journey

Hill Has Been Accepted Into The HF0 Residency

Joining Hill As Head of Product

We turned 10 years of product design expertise into the ultimate AI design copilot

Breaking into UX & Product Design: A Guide for New Designers

Adding 'flexible features' to uncover new product opportunities

Introducing Blair, the companion to help you conquer your day

Pushing Through Creative Block: Learning from Artists and Musicians to Reignite Inspiration

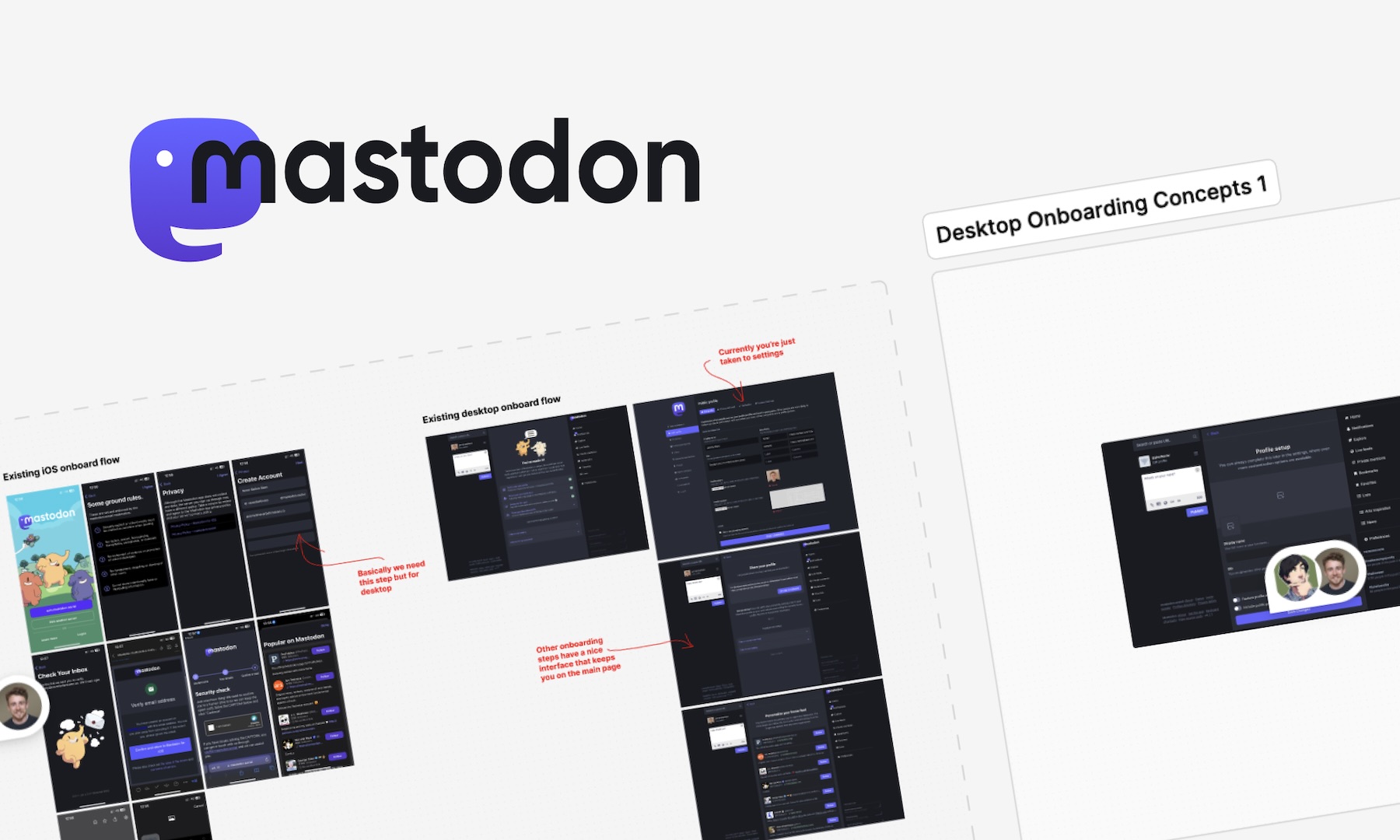

We helped Eugen Rochko, founder of Mastodon, fix their onboarding experience

Unlocking Airbnb’s Secret: How SaaS Founders Can Aim For 10 Stars And Win

How to avoid MVP feature creep: The ICE Method

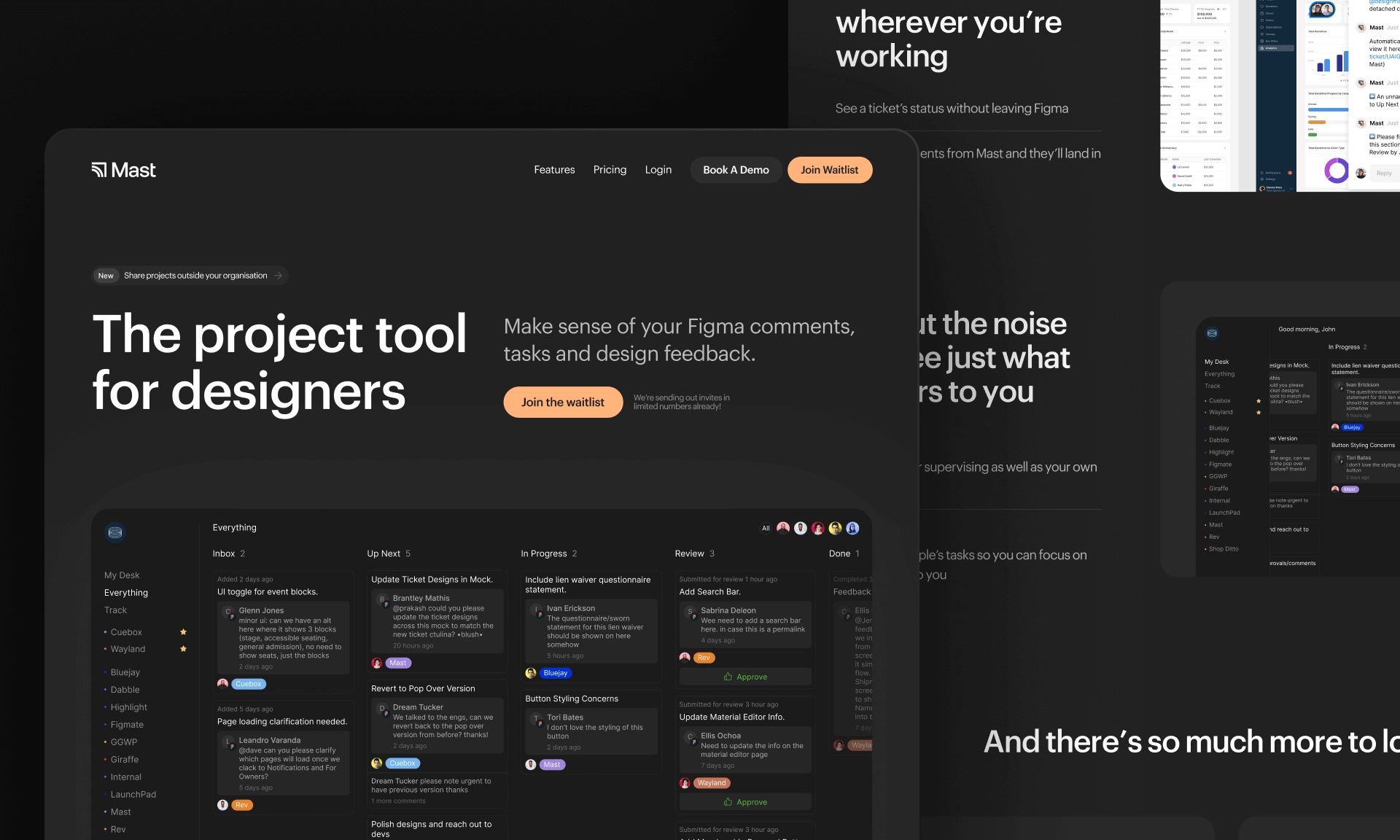

Introducing Mast: The project tool for design teams

Reflecting on my time running a 100-person creative community in the heart of Melbourne

Starting a company during a global pandemic, with Jeremy Blaze on Funemployed

Introducing NOOK: Digital Patron Check-In & Menus to Keep Australia's Hospitality Industry Afloat

Why I’ve started a product design studio

Case Study: Making sense of LIVE carpark availability in UbiPark

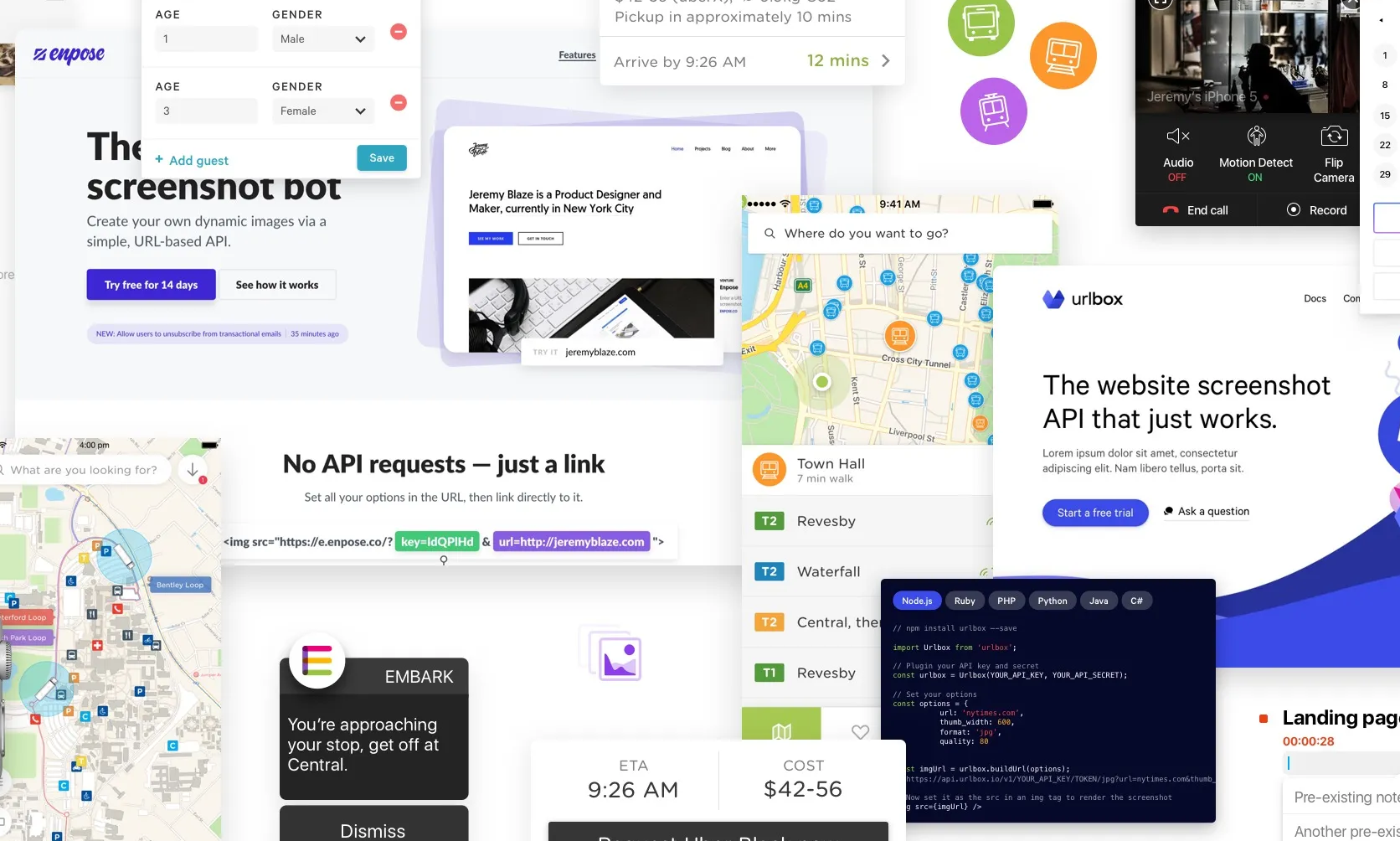

Case Study: Embark’s design, launch & early iteration

Enpose has been acquired by ApiLayer, solidifying their position in the screenshot API market

An exercise in reductive design: Building a beautiful API